Federated Learning for Privacy-Preserving Alzheimer's Disease Classification

MSc dissertation investigating privacy-preserving federated learning for Alzheimer's Disease classification using 3D MRI data from ADNI, introducing a novel Adaptive Local Differential Privacy mechanism.

Overview

This MSc dissertation investigates privacy-preserving federated learning for Alzheimer's Disease (AD) classification using 3D MRI data from the Alzheimer's Disease Neuroimaging Initiative (ADNI). The research addresses critical gaps in existing methodologies: unrealistic data partitioning, inadequate privacy guarantees, and insufficient benchmarking for practical healthcare deployment.

Problem Statement

Alzheimer's Disease affects millions worldwide, and early detection through MRI analysis is crucial for intervention. However, medical imaging data is subject to strict privacy regulations (HIPAA, GDPR), and centralised machine learning requires aggregating patient data in one place, which poses significant privacy risks. Traditional federated learning approaches face fundamental limitations:

- Artificial data partitioning that mixes samples from different institutions, obscuring real-world non-IID conditions

- Fixed-noise differential privacy that causes severe performance degradation, with training loss often diverging

- Insufficient privacy-utility trade-offs that limit clinical applicability

Novel Contributions

Adaptive Local Differential Privacy (ALDP)

The primary methodological contribution is the ALDP mechanism, which addresses the limitations of fixed-noise differential privacy through two complementary adaptations:

Temporal Privacy Budget Adaptation:

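The privacy budget grows exponentially across training rounds:

$$\varepsilon_t = \varepsilon_0 \, e^{\alpha t}$$

where $\varepsilon_t$ is the privacy budget at round $t$, $\varepsilon_0$ is the initial budget, and $\alpha > 0$ controls the rate of budget expansion. The budget is bounded:

$$\varepsilon_t = \min\left(\varepsilon_0 \, e^{\alpha t},\ \varepsilon_{\max}\right)$$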
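A minimal Python sketch of the schedule, assuming the capped exponential form $\varepsilon_t = \min(\varepsilon_0 e^{\alpha t}, \varepsilon_{\max})$. The function name and the example values ($\varepsilon_0 = 500$, $\alpha = 0.1$, $\varepsilon_{\max} = 2000$) are hypothetical, chosen only to show the shape of the curve.

```python
import math


def privacy_budget(t: int, eps0: float, alpha: float, eps_max: float) -> float:
    """Per-round privacy budget under an assumed capped exponential schedule.

    eps_t = min(eps0 * exp(alpha * t), eps_max). Names are illustrative,
    not taken from the dissertation's code.
    """
    if t < 0:
        raise ValueError("round index must be non-negative")
    return min(eps0 * math.exp(alpha * t), eps_max)


# Hypothetical settings: budget starts at 500, grows each round, capped at 2000.
schedule = [privacy_budget(t, eps0=500.0, alpha=0.1, eps_max=2000.0) for t in range(20)]
print([round(e) for e in schedule])
```

Under the Gaussian mechanism a larger per-round budget means less noise, so early rounds are the noisiest and later rounds, once the cap is reached, run at a constant noise level.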

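**Gaussian Noise Scale for $(\varepsilon, \delta)$-DP:**

$$\sigma_t = \frac{C \sqrt{2 \ln(1.25/\delta)}}{\varepsilon_t}$$

where $C$ (the clipping norm) serves as the $L_2$ sensitivity bound.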
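The noise scale follows the classical Gaussian mechanism. A small sketch, assuming each client update is first clipped to $L_2$ norm $C$ so that $C$ bounds the sensitivity; the helper names `gaussian_sigma` and `clip_l2` and the example $\delta$ are hypothetical.

```python
import math


def gaussian_sigma(eps: float, delta: float, clip_norm: float) -> float:
    """Noise std for the classical Gaussian mechanism with L2 sensitivity clip_norm.

    sigma = C * sqrt(2 ln(1.25 / delta)) / eps. The classical analysis of this
    calibration assumes eps < 1.
    """
    if eps <= 0 or not 0 < delta < 1 or clip_norm <= 0:
        raise ValueError("need eps > 0, 0 < delta < 1 and clip_norm > 0")
    return clip_norm * math.sqrt(2.0 * math.log(1.25 / delta)) / eps


def clip_l2(update: list[float], clip_norm: float) -> list[float]:
    """Rescale the update so its L2 norm is at most clip_norm (the sensitivity bound)."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm <= clip_norm:
        return list(update)
    return [x * clip_norm / norm for x in update]


# Hypothetical values: delta = 1e-5, C = 1.0; doubling eps halves the noise.
print(gaussian_sigma(1.0, 1e-5, 1.0), gaussian_sigma(2.0, 1e-5, 1.0))
```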

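Per-Tensor Variance-Aware Noise Scaling:

$$\sigma_{t,k} = \sigma_t \cdot \frac{s_k}{\bar{s}}$$

where $s_k$ is the standard deviation of tensor $k$, and $\bar{s}$ is the mean standard deviation across all tensors. The final noisy parameters are:

$$\tilde{\theta}_k = \theta_k + \mathcal{N}\left(0, \sigma_{t,k}^2 I\right)$$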
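A stdlib-only sketch of the per-tensor scaling, assuming tensors are given as flat lists of floats keyed by name, $s_k$ is the population standard deviation, and the scaling is $\sigma_{t,k} = \sigma_t s_k / \bar{s}$. Function and tensor names are hypothetical; a real implementation would operate on framework tensors.

```python
import random
import statistics


def per_tensor_sigmas(tensors: dict[str, list[float]], sigma_t: float) -> dict[str, float]:
    """sigma_k = sigma_t * s_k / s_bar, with s_k the std of tensor k and s_bar their mean."""
    stds = {name: statistics.pstdev(values) for name, values in tensors.items()}
    s_bar = statistics.fmean(stds.values())
    if s_bar == 0:
        # Every tensor is constant: fall back to the uniform base scale.
        return {name: sigma_t for name in tensors}
    return {name: sigma_t * s / s_bar for name, s in stds.items()}


def add_variance_aware_noise(tensors: dict[str, list[float]], sigma_t: float,
                             rng: random.Random) -> dict[str, list[float]]:
    """Return a noisy copy: each element of tensor k gets N(0, sigma_k^2) noise."""
    sigmas = per_tensor_sigmas(tensors, sigma_t)
    return {name: [x + rng.gauss(0.0, sigmas[name]) for x in values]
            for name, values in tensors.items()}


# Hypothetical two-tensor model: "conv" varies three times as much as "bias".
params = {"bias": [1.0, -1.0, 1.0, -1.0], "conv": [3.0, -3.0, 3.0, -3.0]}
print(per_tensor_sigmas(params, sigma_t=1.0))
noisy = add_variance_aware_noise(params, sigma_t=1.0, rng=random.Random(0))
```

Tensors with a larger spread receive proportionally more noise, while the average scale across tensors stays at $\sigma_t$.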

This dual adaptation retains formal privacy guarantees while substantially improving utility over fixed-noise DP, raising accuracy by 4.8-6.4 percentage points at matched privacy budgets in the experiments reported below.

Site-Aware Data Partitioning

A novel evaluation methodology that preserves institutional boundaries during federated learning simulation:

- Maintains natural multi-site heterogeneity from ADNI data collection

- Assigns whole sites to clients with greedy load balancing, so that each client receives approximately the same number of samples

- Enables realistic assessment of multi-institutional collaboration challenges
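One way to realise the greedy load balancing is the classic longest-processing-time heuristic: sort sites by size and give each whole site to the client with the fewest samples so far. This is a sketch under that assumption (the exact ordering and tie-breaking used in the dissertation are not stated), and the site names and counts are hypothetical.

```python
import heapq


def assign_sites(site_counts: dict[str, int], n_clients: int) -> list[list[str]]:
    """Assign whole sites to clients: largest site first, each to the least-loaded client."""
    if n_clients < 1:
        raise ValueError("need at least one client")
    heap = [(0, c) for c in range(n_clients)]  # (samples assigned so far, client id)
    heapq.heapify(heap)
    clients: list[list[str]] = [[] for _ in range(n_clients)]
    # Sort by descending size; break ties by name so the result is deterministic.
    for site, count in sorted(site_counts.items(), key=lambda kv: (-kv[1], kv[0])):
        load, c = heapq.heappop(heap)
        clients[c].append(site)  # a site is never split across clients
        heapq.heappush(heap, (load + count, c))
    return clients


sites = {"S1": 120, "S2": 90, "S3": 80, "S4": 60, "S5": 40}  # hypothetical sites
clients = assign_sites(sites, n_clients=2)
print(clients, [sum(sites[s] for s in c) for c in clients])
```

Keeping sites intact preserves each institution's scanner and population characteristics inside a single client, which is what produces the natural non-IID split.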

Technical Implementation

Data Pipeline

- Processed 797 3D T1-weighted MRI scans from the ADNI database: 490 cognitively normal (CN) and 307 AD

- Preprocessing with ANTs (spatial normalisation to the MNI template) and FSL (skull stripping via BET)

- Held-out balanced test set of 100 subjects (50 CN, 50 AD)

- Comprehensive data augmentation using MONAI (geometric transforms, elastic deformations, MRI-specific intensity augmentations)

Model Architecture

- 8-layer 3D CNN with progressive channel expansion [8→8→16→16→32→32→64→64]

- GroupNorm layers for differential privacy compatibility

- AdamW optimiser with cosine annealing learning rate scheduling

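Weighted Cross-Entropy Loss to handle class imbalance:

$$\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} w_{y_i} \log \hat{p}_{i,y_i}$$

where $\hat{p}_{i,y_i}$ is the predicted probability of sample $i$'s true class $y_i$, and the class weights are computed using inverse frequency:

$$w_c = \frac{N}{K \, N_c}$$

with $N$ training samples, $K = 2$ classes (CN and AD), and $N_c$ samples in class $c$.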

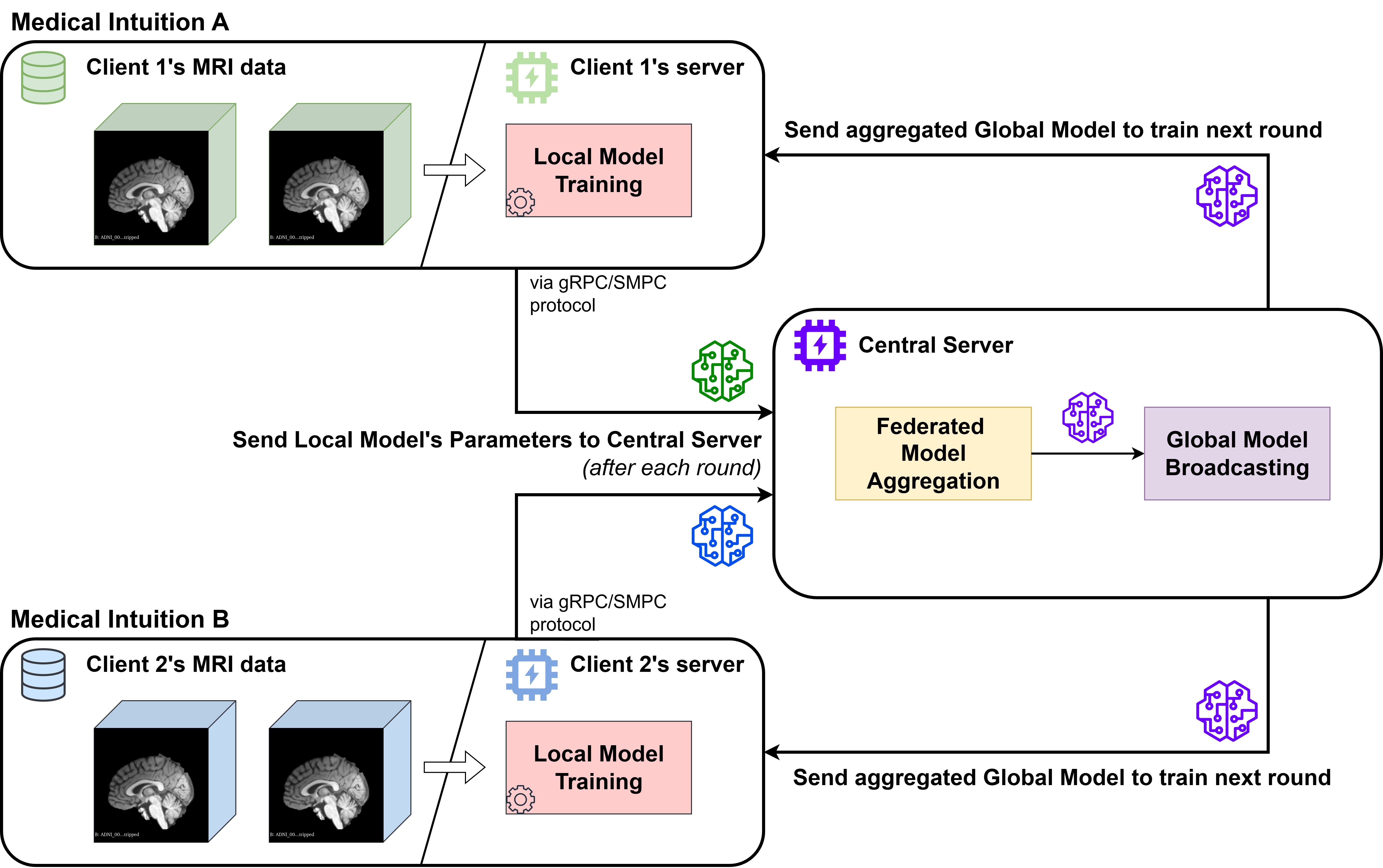
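A plain-Python sketch of the inverse-frequency weights and the weighted cross-entropy described above. The counts assume the training pool is every scan outside the balanced 100-subject test set (490 - 50 = 440 CN, 307 - 50 = 257 AD); the actual training split, including any validation hold-out, may differ. This version averages over $N$ samples, whereas PyTorch's `nn.CrossEntropyLoss(weight=...)` with `reduction='mean'` divides by the sum of the per-sample weights instead.

```python
import math


def inverse_frequency_weights(counts: dict[str, int]) -> dict[str, float]:
    """w_c = N / (K * N_c): a perfectly balanced dataset gives every class weight 1."""
    n_total = sum(counts.values())
    n_classes = len(counts)
    return {c: n_total / (n_classes * n_c) for c, n_c in counts.items()}


def weighted_cross_entropy(probs: list[dict[str, float]], labels: list[str],
                           weights: dict[str, float]) -> float:
    """Mean over samples of -w_{y_i} * log p(y_i | x_i).

    probs[i] maps each class to the model's predicted probability for sample i.
    """
    if len(probs) != len(labels) or not labels:
        raise ValueError("need one probability dict per label")
    total = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    return total / len(labels)


# Assumed training counts (all scans minus the 50 CN / 50 AD test subjects).
weights = inverse_frequency_weights({"CN": 440, "AD": 257})
print({c: round(w, 3) for c, w in weights.items()})  # AD, the minority class, gets the larger weight
```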

Federated Learning Strategies

FedAvg: Baseline federated optimisation aggregating client updates via weighted averaging.
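FedAvg's aggregation step can be sketched as follows, assuming each client reports a flattened parameter vector and is weighted by its local sample count (the standard FedAvg weighting). The function name and toy values are hypothetical.

```python
def fedavg(client_params: list[list[float]], n_samples: list[int]) -> list[float]:
    """Weighted average of client parameter vectors, with weight n_k / sum_j n_j."""
    if not client_params or len(client_params) != len(n_samples):
        raise ValueError("need one sample count per client")
    total = sum(n_samples)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n_k in zip(client_params, n_samples):
        if len(params) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, p in enumerate(params):
            global_params[i] += (n_k / total) * p
    return global_params


# Toy example: the second client holds three times as much data.
print(fedavg([[1.0, 2.0], [3.0, 6.0]], [100, 300]))
```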

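FedProx: Adds proximal regularisation to handle client heterogeneity. Each client $k$ minimises:

$$\min_{w} \; h_k(w; w^t) = F_k(w) + \frac{\mu}{2} \lVert w - w^t \rVert^2$$

where $F_k$ is client $k$'s local loss, $\mu$ is the proximal regularisation parameter, and $w^t$ is the global model at round $t$.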
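A toy illustration of the proximal term, assuming plain gradient descent on the client objective starting from the global model, and a hypothetical quadratic local loss $F_k(w) = \tfrac{1}{2}\lVert w - c_k \rVert^2$. Its minimiser is $(c_k + \mu w^t)/(1 + \mu)$, so a larger $\mu$ keeps the local model closer to the global one.

```python
from typing import Callable


def fedprox_local_update(grad_f: Callable[[list[float]], list[float]],
                         w_global: list[float], mu: float, lr: float,
                         steps: int) -> list[float]:
    """Gradient descent on F_k(w) + (mu / 2) * ||w - w_global||^2, starting from w_global."""
    w = list(w_global)
    for _ in range(steps):
        g = grad_f(w)
        # The proximal term contributes mu * (w - w_global) to the gradient.
        w = [wi - lr * (gi + mu * (wi - wg)) for wi, gi, wg in zip(w, g, w_global)]
    return w


# Hypothetical client whose own optimum is c_k = [4.0] while the global model is at [0.0].
c_k = [4.0]


def grad_local(w: list[float]) -> list[float]:
    """Gradient of the toy local loss 0.5 * ||w - c_k||^2."""
    return [wi - ci for wi, ci in zip(w, c_k)]


print(fedprox_local_update(grad_local, [0.0], mu=0.0, lr=0.1, steps=500))  # drifts to c_k
print(fedprox_local_update(grad_local, [0.0], mu=1.0, lr=0.1, steps=500))  # stops halfway
```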

SecAgg+: Cryptographic secure aggregation with parameter quantisation.
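The core idea behind secure aggregation can be sketched with pairwise additive masks over quantised integers: every pair of clients shares a random mask that one adds and the other subtracts, so the server learns only the sum. This is a simplified illustration, not the SecAgg+ protocol itself: key agreement, secret sharing for dropped clients and SecAgg+'s sparse communication graph are omitted, masks come from a seeded `random.Random` stand-in, and the modulus, bit width and quantisation bound are hypothetical.

```python
import random

MODULUS = 2 ** 32   # arithmetic is done modulo this (hypothetical choice)
BITS = 16           # quantisation resolution per value (hypothetical)


def quantise(x: float, bound: float) -> int:
    """Clip x to [-bound, bound] and map it to an integer in [0, 2**BITS - 1]."""
    levels = 2 ** BITS - 1
    x = max(-bound, min(bound, x))
    return round((x + bound) / (2 * bound) * levels)


def dequantise_sum(q_sum: int, n_clients: int, bound: float) -> float:
    """Invert quantise() for the sum of n_clients quantised values."""
    levels = 2 ** BITS - 1
    return q_sum / levels * 2 * bound - n_clients * bound


def mask_inputs(q_values: list[int], seed: int) -> list[int]:
    """Client i adds mask m_ij and client j subtracts it, for every pair i < j."""
    n = len(q_values)
    masked = [q % MODULUS for q in q_values]
    for i in range(n):
        for j in range(i + 1, n):
            # Stand-in for a mask expanded from a secret agreed by clients i and j.
            m_ij = random.Random(seed * 1_000_003 + i * n + j).randrange(MODULUS)
            masked[i] = (masked[i] + m_ij) % MODULUS
            masked[j] = (masked[j] - m_ij) % MODULUS
    return masked


# Three clients each hold one (hypothetical) parameter value in [-1, 1].
values = [0.3, -0.5, 0.9]
q = [quantise(v, bound=1.0) for v in values]
masked = mask_inputs(q, seed=42)
server_sum = sum(masked) % MODULUS  # the pairwise masks cancel here
print(dequantise_sum(server_sum, len(values), bound=1.0))  # close to 0.7
```

Quantisation is what makes masking possible: the masks only cancel exactly in integer arithmetic modulo a fixed value, at the cost of a small rounding error in the recovered sum.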

Local DP & ALDP: Privacy-preserving mechanisms with formal $(\varepsilon, \delta)$-differential privacy guarantees.

Key Results

Federated vs Centralised Performance

| Strategy | Clients | Accuracy | F1 Score |
|---|---|---|---|
| Centralised | -- | 80.2±2.2% | 79.7±2.5% |
| FedProx | 2 | 80.4±2.3% | 80.1±2.4% |
| FedProx | 3 | 81.4±3.2% | 81.3±3.2% |
| FedAvg | 4 | 78.6±3.4% | 78.1±3.7% |

Privacy-Preserving Performance (ALDP vs Fixed DP)

| Privacy Budget (ε) | Fixed DP Accuracy | ALDP Accuracy | Improvement |
|---|---|---|---|
| 500 | 70.2±4.5% | 75.6±3.9% | +5.4pp |
| 1000 | 72.0±4.9% | 78.4±3.3% | +6.4pp |
| 2000 | 75.6±4.6% | 80.4±0.8% | +4.8pp |

Clinical Impact

- FedProx improved AD sensitivity from 64% (centralised) to 74%, a 10-point gain that matters clinically because fewer AD cases are missed at the early-detection stage

- In an ablation study, clients trained in isolation on their own data reached 68-75% accuracy, versus 81.4% through federation (FedProx, 3 clients)

- Federated training added 22-38% training-time overhead relative to centralised training

Significance

This research demonstrates that federated learning, when implemented with realistic methodological design and adaptive privacy-preserving techniques, can match or slightly exceed centralised diagnostic performance (81.4% vs 80.2% accuracy, with higher AD sensitivity) while providing formal privacy guarantees. The ALDP mechanism and site-aware partitioning strategy establish methodological foundations for privacy-compliant AI adoption in healthcare.

Key Achievements

- Developed novel Adaptive Local Differential Privacy (ALDP) mechanism combining temporal privacy budget scheduling with per-tensor variance-aware noise scaling

- ALDP achieved 80.4±0.8% accuracy at ε = 2000, outperforming fixed-noise DP by 4.8-6.4pp across all tested budgets and matching the non-private centralised baseline (80.2±2.2%)

- FedProx achieved 81.4±3.2% accuracy in the 3-client configuration, exceeding centralised training (80.2±2.2%) while improving AD sensitivity from 64% to 74%

- Introduced site-aware data partitioning strategy preserving institutional boundaries for realistic federated learning evaluation

Project Details

Role

Researcher

Team Size

Individual (supervised by Prof. Gustavo Carneiro)

Duration

Apr 2025 - Sep 2025
